EMOPIA

The EMOPIA (pronounced ‘yee-mò-pi-uh’) dataset is a shared multi-modal (audio and MIDI) database focusing on perceived emotion in pop piano music, built to facilitate research on various tasks related to music emotion. The dataset contains 1,087 music clips from 387 songs, with clip-level emotion labels annotated by four dedicated annotators. Since the dataset is not restricted to one clip per song, it can also be used for song-level analysis.

For details on the methodology used to build the dataset, please refer to our paper.

Example of the dataset

| | Low Valence | High Valence |
|---|---|---|
| High Arousal | Q2 | Q1 |
| Low Arousal | Q3 | Q4 |

Number of clips

The following table shows the number of clips in EMOPIA and their average length for each quadrant of Russell’s valence-arousal emotion space.

| Quadrant | # clips | Avg. length (sec / # tokens) |
|---|---|---|
| Q1 | 250 | 31.9 / 1,065 |
| Q2 | 265 | 35.6 / 1,368 |
| Q3 | 253 | 40.6 / 771 |
| Q4 | 310 | 38.2 / 729 |
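Each quadrant corresponds to one combination of binary valence and arousal: Q1 is high valence and high arousal, Q2 is low valence and high arousal, Q3 is low valence and low arousal, and Q4 is high valence and low arousal. The short Python sketch below uses the numbers in the table to derive per-dimension class counts and clip-weighted average lengths. The variable and function names are illustrative only.

```python
# Per-quadrant statistics copied from the table above:
# quadrant -> (number of clips, avg. length in seconds, avg. length in tokens)
QUADRANT_STATS = {
    "Q1": (250, 31.9, 1065),
    "Q2": (265, 35.6, 1368),
    "Q3": (253, 40.6, 771),
    "Q4": (310, 38.2, 729),
}

# Russell's valence-arousal space: each quadrant is a (valence, arousal) pair.
QUADRANT_TO_VA = {
    "Q1": ("high", "high"),
    "Q2": ("low", "high"),
    "Q3": ("low", "low"),
    "Q4": ("high", "low"),
}


def binary_counts(stats, mapping):
    """Collapse the 4-class quadrant counts into binary valence/arousal counts."""
    valence = {"high": 0, "low": 0}
    arousal = {"high": 0, "low": 0}
    for quadrant, (n_clips, _, _) in stats.items():
        v, a = mapping[quadrant]
        valence[v] += n_clips
        arousal[a] += n_clips
    return valence, arousal


def weighted_avg_lengths(stats):
    """Total clips and clip-weighted mean length (seconds, tokens) over all quadrants."""
    total = sum(n for n, _, _ in stats.values())
    secs = sum(n * s for n, s, _ in stats.values()) / total
    toks = sum(n * t for n, _, t in stats.values()) / total
    return total, secs, toks


if __name__ == "__main__":
    valence, arousal = binary_counts(QUADRANT_STATS, QUADRANT_TO_VA)
    total, secs, toks = weighted_avg_lengths(QUADRANT_STATS)
    print("valence:", valence)  # valence: {'high': 560, 'low': 518}
    print("arousal:", arousal)  # arousal: {'high': 515, 'low': 563}
    print(f"{total} clips, {secs:.1f} s / {toks:.0f} tokens on average")
```

Collapsing quadrants this way is one simple option when setting up separate valence-only or arousal-only classification tasks.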

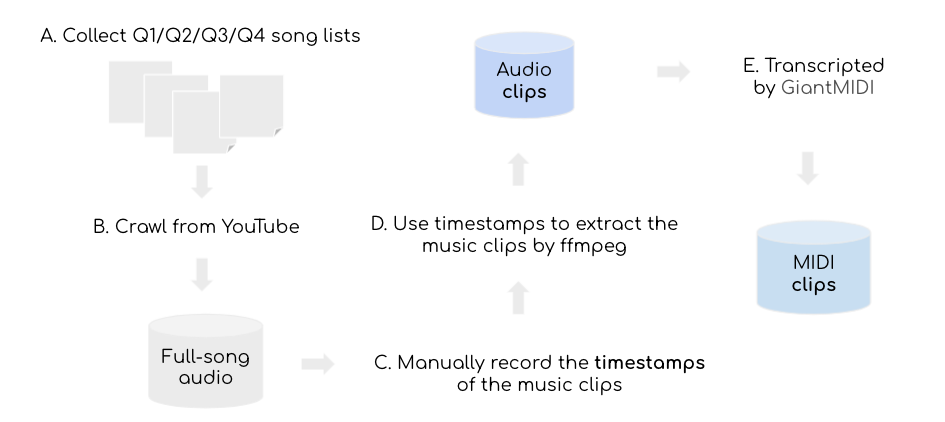

Pipeline of data collection

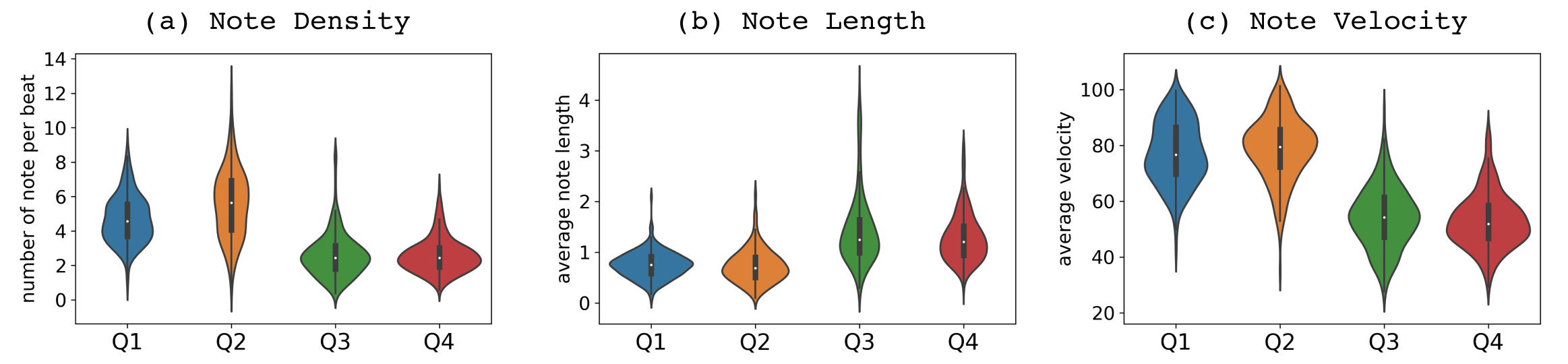

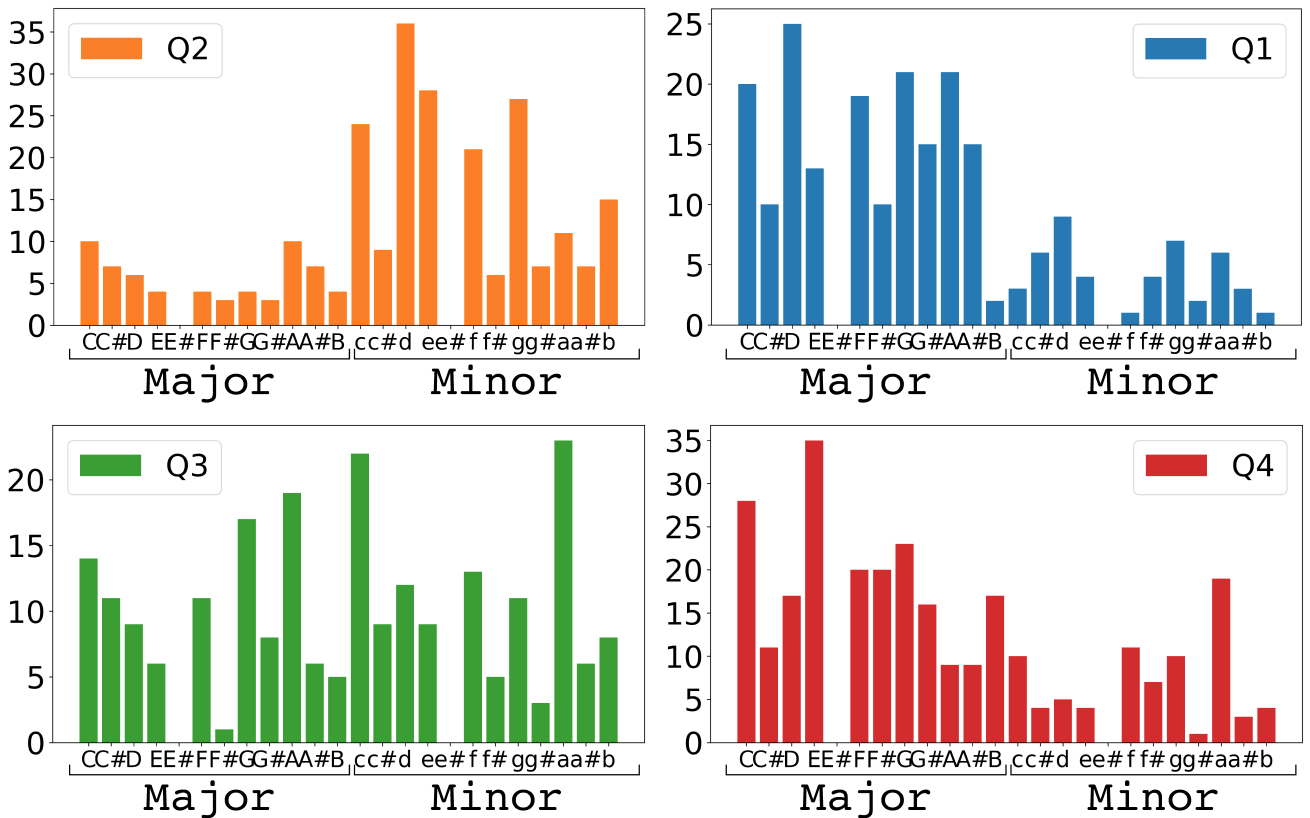

Dataset Analysis

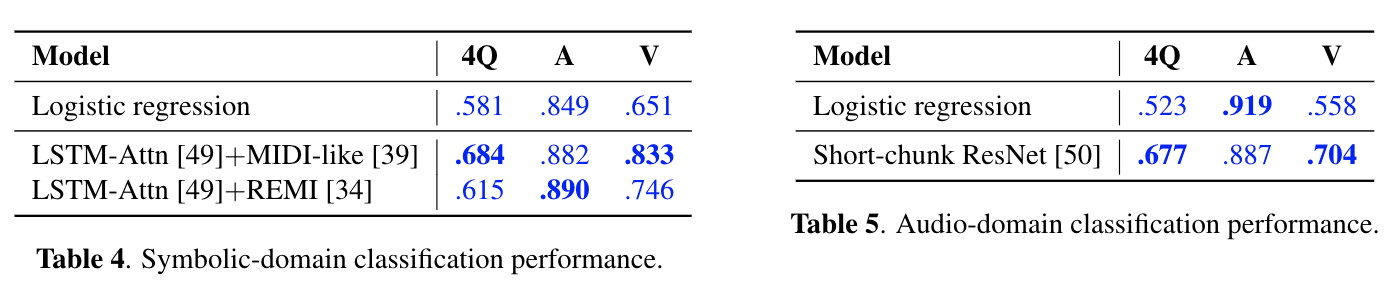

Emotion Classification


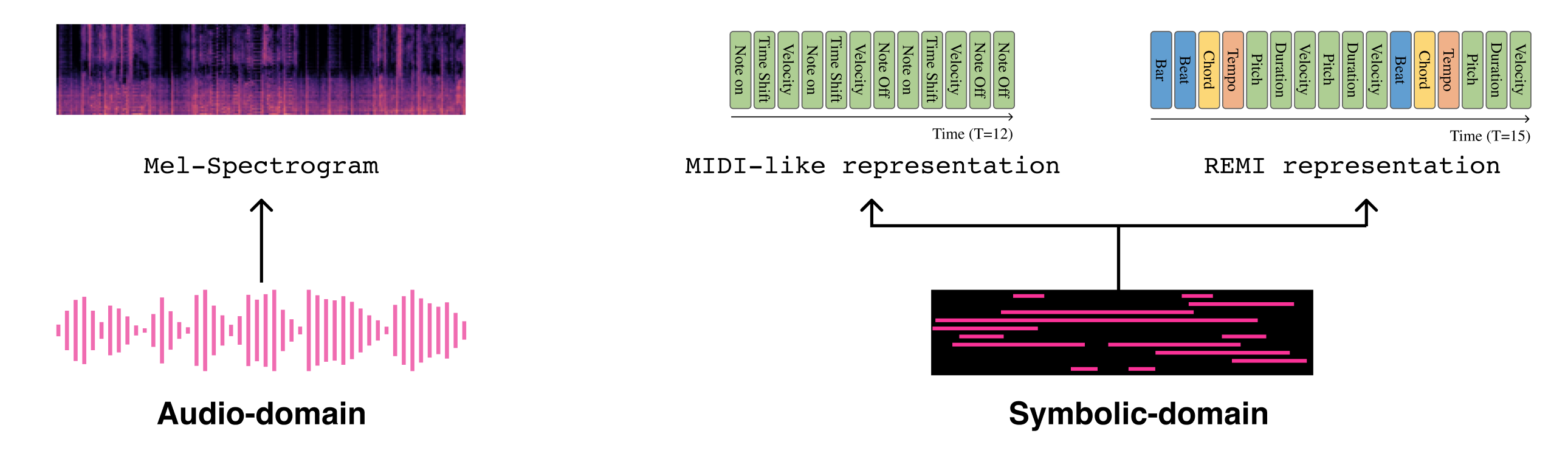

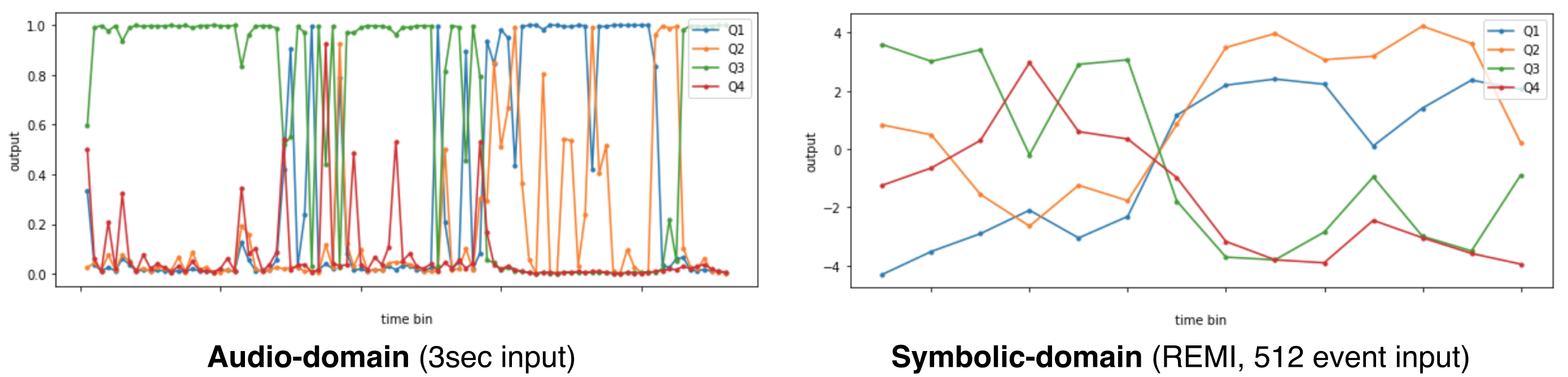

- We performed the emotion classification task in both the audio and the symbolic domains.

- Our baseline approach is logistic regression on handcrafted features. For the symbolic domain, we used note length, velocity, beat note density, and key. For the audio domain, we used the average of 20-dimensional mel-frequency cepstral coefficient (MFCC) vectors.

- For the deep learning approach, the input representation is a mel-spectrogram in the audio domain and MIDI-like and REMI token sequences in the symbolic domain.

- For the symbolic-domain classification network, we adopt the model from “A Structured Self-attentive Sentence Embedding”. For the audio-domain classification network, we adopt Short-chunk CNN + Residual.

- An inference example is Sakamoto: Merry Christmas Mr. Lawrence. The emotional change between the first and second halves of the song is striking: the first half is clearly low arousal, while the second half turns into high arousal. Interestingly, the audio and MIDI classifiers return different inference results.
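The baseline described above can be illustrated with the minimal NumPy sketch below. It rests on several assumptions. Notes are given as hypothetical `(onset_beat, duration_beats, pitch, velocity)` tuples. The key has already been estimated and reduced to a major/minor flag. The task is a single binary one, such as high vs. low arousal. The function names, the note format, and the plain gradient-descent logistic regression are illustrative choices and are not the code in the classification repository. For the audio baseline, the hypothetical `average_mfcc` helper expects a precomputed (20, T) MFCC matrix; MFCC extraction itself is not shown.

```python
import numpy as np


def symbolic_features(notes, is_minor):
    """Clip-level handcrafted features for the symbolic baseline (hypothetical format).

    notes: (onset_beat, duration_beats, pitch, velocity) tuples; pitch is unused here.
    is_minor: key mode, assumed to be estimated elsewhere (1 = minor, 0 = major).
    """
    arr = np.asarray(notes, dtype=float)
    onsets, durations, velocities = arr[:, 0], arr[:, 1], arr[:, 3]
    span_beats = max((onsets + durations).max() - onsets.min(), 1.0)
    return np.array([
        durations.mean(),           # mean note length (beats)
        velocities.mean() / 127.0,  # mean velocity, scaled to [0, 1]
        len(notes) / span_beats,    # beat note density (notes per beat)
        float(is_minor),            # key mode
    ])


def average_mfcc(mfcc):
    """Audio baseline feature: average a precomputed (20, T) MFCC matrix over time."""
    return np.asarray(mfcc, dtype=float).mean(axis=1)


def fit_logistic_regression(X, y, lr=0.1, epochs=2000):
    """Binary logistic regression trained with batch gradient descent."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    mu, sigma = X.mean(axis=0), X.std(axis=0) + 1e-8  # standardize each feature
    Xs = (X - mu) / sigma
    w, b = np.zeros(Xs.shape[1]), 0.0
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(Xs @ w + b)))  # predicted P(class 1)
        grad = p - y                             # gradient of the log loss w.r.t. logits
        w -= lr * Xs.T @ grad / len(y)
        b -= lr * grad.mean()
    return w, b, mu, sigma


def predict(model, X):
    """Threshold the predicted probability at 0.5."""
    w, b, mu, sigma = model
    Xs = (np.asarray(X, dtype=float) - mu) / sigma
    return (1.0 / (1.0 + np.exp(-(Xs @ w + b))) >= 0.5).astype(int)


if __name__ == "__main__":
    # Toy clips (made-up data): dense, loud, short notes vs. sparse, soft, long notes.
    loud = [(i * 0.25, 0.25, 60 + i % 12, 100) for i in range(32)]
    soft = [(i * 2.0, 2.0, 48 + i % 12, 40) for i in range(8)]
    X = [symbolic_features(loud, 0), symbolic_features(loud, 1),
         symbolic_features(soft, 0), symbolic_features(soft, 1)]
    y = [1, 1, 0, 0]  # 1 = high arousal, 0 = low arousal
    model = fit_logistic_regression(X, y)
    print(predict(model, X))  # [1 1 0 0]
```

In practice a library implementation of logistic regression would normally replace the hand-written training loop. The loop is spelled out here only to keep the sketch dependency-light.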

For the classification code, please refer to SeungHeon’s repository.

The pre-trained model weights are also in the repository.
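The symbolic-domain classifier builds on the structured self-attention of “A Structured Self-attentive Sentence Embedding” (Lin et al., 2017). The core of that method is an attention matrix A = softmax(W_s2 tanh(W_s1 Hᵀ)). This matrix pools a sequence of hidden states H (n × 2u, for example BiLSTM outputs over MIDI-like or REMI tokens) into r weighted sums M = AH. The NumPy forward pass below is a minimal sketch of that technique, using random weights and arbitrary sizes (`d_a`, `r`). It is not the trained model or code from the repository.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def structured_self_attention(H, W_s1, W_s2):
    """Pool hidden states H (n, 2u) into an embedding matrix M (r, 2u).

    W_s1 has shape (d_a, 2u) and W_s2 has shape (r, d_a). Each of the r rows
    of A is a probability distribution over the n time steps.
    """
    A = softmax(W_s2 @ np.tanh(W_s1 @ H.T), axis=1)  # (r, n)
    M = A @ H                                       # (r, 2u)
    return A, M


def attention_penalty(A):
    """Penalty ||A A^T - I||_F^2 that discourages rows of A from attending alike."""
    r = A.shape[0]
    return float(np.sum((A @ A.T - np.eye(r)) ** 2))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n, two_u, d_a, r = 50, 64, 32, 4           # arbitrary illustrative sizes
    H = rng.standard_normal((n, two_u))        # stand-in for BiLSTM outputs
    W_s1 = rng.standard_normal((d_a, two_u)) * 0.1
    W_s2 = rng.standard_normal((r, d_a)) * 0.1
    A, M = structured_self_attention(H, W_s1, W_s2)
    print(A.shape, M.shape)  # (4, 50) (4, 64)
```

In the original method, M is flattened and passed to a fully connected classifier, and the penalty is added to the training loss.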

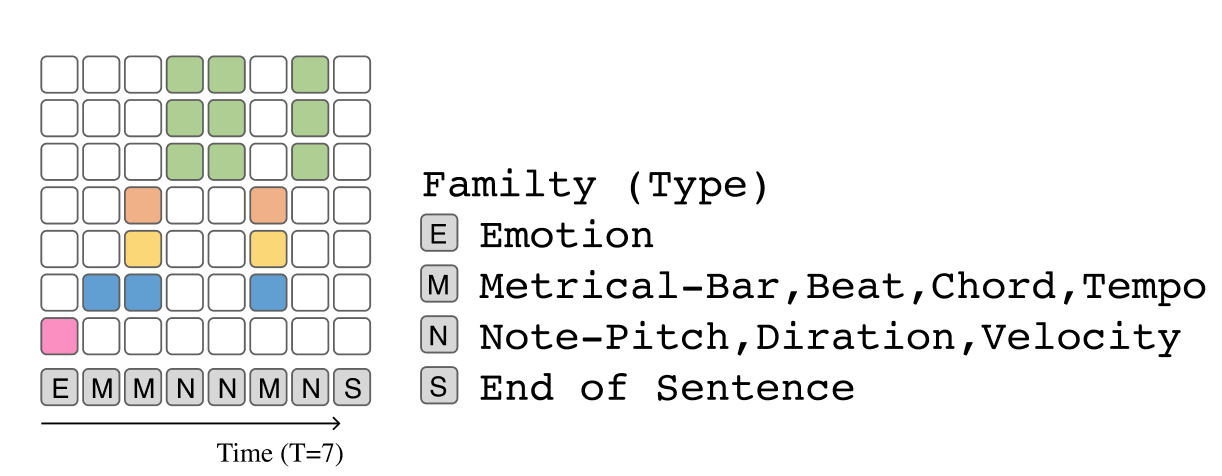

Conditional Generation

- We adopt the Compound Word Transformer for emotion-conditioned symbolic music generation using EMOPIA, with the CP+emotion representation as the data representation.

- In this representation, we add “emotion” tokens as a new token family and prepend them to each sequence. This prepending approach is motivated by CTRL.

- As EMOPIA may not be large enough on its own, we additionally use the AILabs1k7 dataset compiled by Hsiao et al. to pre-train the Transformer.

- You can download the weights of the pre-trained Transformer from here.
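Prepending an emotion token to a compound-word sequence, CTRL-style, can be sketched as follows. This assumes each compound word is a dict that maps token families to values. The family names (`type`, `beat`, `pitch`, `duration`, `velocity`, `emotion`) and the `<ignore>` placeholder are hypothetical stand-ins, not the exact vocabulary of the Compound Word Transformer code.

```python
from typing import Dict, List

CompoundWord = Dict[str, str]

# Hypothetical token families; "emotion" is the newly added family.
FAMILIES = ["type", "beat", "pitch", "duration", "velocity", "emotion"]
IGNORE = "<ignore>"  # hypothetical placeholder for families a word does not use
QUADRANTS = {"Q1", "Q2", "Q3", "Q4"}


def make_word(**values: str) -> CompoundWord:
    """Build a compound word; families that are not given are filled with IGNORE."""
    unknown = set(values) - set(FAMILIES)
    if unknown:
        raise ValueError(f"unknown families: {sorted(unknown)}")
    return {family: values.get(family, IGNORE) for family in FAMILIES}


def prepend_emotion(sequence: List[CompoundWord], quadrant: str) -> List[CompoundWord]:
    """Return a new sequence whose first word carries the emotion condition."""
    if quadrant not in QUADRANTS:
        raise ValueError(f"quadrant must be one of {sorted(QUADRANTS)}")
    return [make_word(type="Emotion", emotion=quadrant)] + list(sequence)


if __name__ == "__main__":
    clip = [
        make_word(type="Metrical", beat="Bar"),
        make_word(type="Note", pitch="60", duration="8", velocity="80"),
    ]
    conditioned = prepend_emotion(clip, "Q4")
    print([w["type"] for w in conditioned])  # ['Emotion', 'Metrical', 'Note']
    print(conditioned[0]["emotion"])          # Q4
```

Because the emotion word comes first, every later prediction can attend to it. At generation time, one would start decoding from a sequence containing only the emotion word for the target quadrant.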

The following are some generated examples for each quadrant:

Q1 (High valence, high arousal)

Audio examples from: Baseline, Transformer w/o pre-training, Transformer w/ pre-training.

Q2 (Low valence, high arousal)

Audio examples from: Baseline, Transformer w/o pre-training, Transformer w/ pre-training.

Q3 (Low valence, low arousal)

Audio examples from: Baseline, Transformer w/o pre-training, Transformer w/ pre-training.

Q4 (High valence, low arousal)

Audio examples from: Baseline, Transformer w/o pre-training, Transformer w/ pre-training.

Authors and Affiliations

Hsiao-Tzu (Anna) Hung

Research Assistant @ Academia Sinica / MS CSIE student @ National Taiwan University

r08922a20@csie.ntu.edu.tw

website, LinkedIn

Joann Ching

Research Assistant @ Academia Sinica

joann8512@gmail.com

website

Seungheon Doh

Ph.D. student @ Music and Audio Computing Lab, KAIST

seungheondoh@kaist.ac.kr

website, LinkedIn

Juhan Nam

Associate Professor @ KAIST

juhan.nam@kaist.ac.kr

website

Yi-Hsuan Yang

Chief Music Scientist @ Taiwan AI Labs / Associate Research Fellow @ Academia Sinica

affige@gmail.com, yhyang@ailabs.tw

website

Cite this dataset

@inproceedings{EMOPIA,
  author = {Hung, Hsiao-Tzu and Ching, Joann and Doh, Seungheon and Kim, Nabin and Nam, Juhan and Yang, Yi-Hsuan},
  title = {{EMOPIA}: A Multi-Modal Pop Piano Dataset For Emotion Recognition and Emotion-based Music Generation},
  booktitle = {Proc. Int. Society for Music Information Retrieval Conf.},
  year = {2021}
}